When we set out to build the first version of the NodeZero MCP Server, we had two guiding principles:

- Agents need the attacker’s perspective to be useful.

- Any new surface we expose must be secure by design, not by policy.

Most Model Context Protocol (MCP) servers today are overpowered and underdefended. They often:

- Expose orchestration hooks never meant for LLMs

- Dynamically invoke arbitrary tools

- Bind to network ports that are easy to scan and exploit

We didn’t build ours that way.

The NodeZero MCP Server is a constrained, API-native runtime designed to give agents safe, structured access to NodeZero’s GraphQL fields. It turns the Horizon3.ai platform into a programmable offensive security engine that fits into your existing agentic AI workflows without creating new attack surfaces.

Security by Architecture, Not Just Policy

From day zero, we architected the NodeZero MCP Server to inherit our GraphQL API’s permission model and minimize runtime risk.

- API-native permissions

  - All actions go through our GraphQL RBAC model, the same controls that protect our UI and CLI.

  - Tokens and roles are scoped to the user who generated the API key.

  - A potential JWT misuse vulnerability (a confused deputy risk) has been identified: ensure only a SINGLE USER per MCP Server.

  - With SSE/HTTP transport on the locally hosted version, every client assumes the identity of whoever most recently authenticated to the locally deployed MCP server, until that stored JWT expires and a new auth flow occurs. To prevent abuse, use stdio or deploy one NodeZero MCP server per user/API key.

- Runtime isolation

  - The first version runs locally only, with stdio transport as the preferred, secure mode: no network binding, no sockets, no HTTP listener.

  - Using SSE/HTTP mode with the locally hosted version is a security risk, because runtime isolation cannot be guaranteed.

  - The server is designed to run locally, which limits exposure to the local environment.

  - Future H3-hosted MCP versions will add OAuth-based enterprise auth without requiring API keys in IDE settings.

- Minimal surface area

  - The server only exposes two tools:

    - GraphQL Schema Introspection – for structured API discovery

    - GraphQL Query Execution – for permissioned data access

  - LLMs can reason and query, but they can’t execute arbitrary commands.
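The two-tool model described above can be pictured as a fixed allowlist. The following is a minimal sketch, not the NodeZero MCP Server's actual code: the tool names `introspect_schema` and `execute_query`, the handler bodies, and the `dispatch` function are all hypothetical and exist only to show how a closed registry rejects anything outside it.

```python
from types import MappingProxyType
from typing import Any, Callable, Dict


def introspect_schema(args: Dict[str, Any]) -> str:
    # Hypothetical placeholder: a real handler would return the GraphQL schema.
    return "schema: (placeholder)"


def execute_query(args: Dict[str, Any]) -> str:
    # Hypothetical placeholder: a real handler would forward the query to the
    # GraphQL API using the caller's own credentials.
    query = args.get("query", "")
    if not isinstance(query, str) or not query.strip():
        raise ValueError("a non-empty GraphQL query string is required")
    return f"would execute: {query.strip()}"


# Read-only registry: exactly two tools, nothing can be added at runtime.
TOOLS = MappingProxyType({
    "introspect_schema": introspect_schema,
    "execute_query": execute_query,
})


def dispatch(tool_name: str, args: Dict[str, Any]) -> str:
    """Run a tool only if it is in the fixed allowlist."""
    handler: Callable[[Dict[str, Any]], str] | None = TOOLS.get(tool_name)
    if handler is None:
        raise PermissionError(f"unknown tool: {tool_name!r}")
    return handler(args)
```

In this sketch, a request for any other tool name (for example a shell runner) fails with `PermissionError` before any handler code runs, and there is no code path that spawns a subprocess.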

Hardening Against Common MCP Attack Vectors

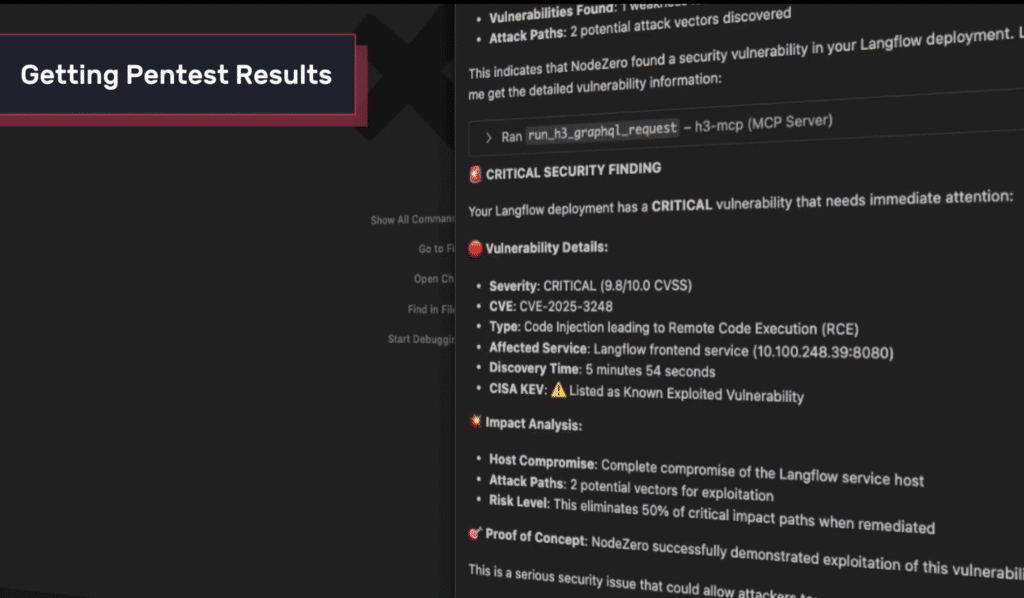

We approached MCP security the way offensive operators would, because offensive research shaped our design. Security researchers have demonstrated how MCP servers can be exploited through:

- Prompt injection & tool poisoning

- Command injection in insecure toolchains

- Network-exposed servers enabling remote code execution (RCE)

- Confused deputy and cross-context attacks

How Research Shaped Our Architecture

Every security control in the NodeZero MCP Server maps directly to a demonstrated threat:

- Prompt injection & tool poisoning → Two-tool-only model (schema introspection + GraphQL query) with no shell or dynamic plugins

- Command injection via toolchains → No subprocess execution, only GraphQL queries

- Network-exposed exploits → Self-hosted runtime with no remotely accessible ports when run in the recommended stdio mode

- Confused deputy & context bleed → Scoped API keys, strict RBAC enforcement, and isolated execution loops

In short, we treated offensive research as a blueprint for defensive engineering, baking security into the architecture from day zero.
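To make the confused deputy risk in SSE/HTTP mode concrete, here is a toy model of the failure. It is an illustration under stated assumptions, not the server's implementation: the class names, client IDs, and token strings are all hypothetical. The first store keeps one JWT for the whole server, so the latest login wins for everyone; the second keys tokens by client, which is conceptually what running one server per user/API key achieves.

```python
from typing import Dict, Optional


class SharedTokenStore:
    """One token slot for the whole server (the risky pattern)."""

    def __init__(self) -> None:
        self._token: Optional[str] = None

    def login(self, client_id: str, token: str) -> None:
        # client_id is ignored: the newest token replaces the old one for all clients.
        self._token = token

    def token_for(self, client_id: str) -> Optional[str]:
        return self._token


class PerClientTokenStore:
    """One token per client, so identities cannot bleed across callers."""

    def __init__(self) -> None:
        self._tokens: Dict[str, str] = {}

    def login(self, client_id: str, token: str) -> None:
        self._tokens[client_id] = token

    def token_for(self, client_id: str) -> Optional[str]:
        return self._tokens.get(client_id)


shared = SharedTokenStore()
shared.login("alice", "jwt-alice")
shared.login("bob", "jwt-bob")
print(shared.token_for("alice"))    # prints "jwt-bob": alice now acts as bob

isolated = PerClientTokenStore()
isolated.login("alice", "jwt-alice")
isolated.login("bob", "jwt-bob")
print(isolated.token_for("alice"))  # prints "jwt-alice"
```

The recommended mitigation remains the one stated earlier in this post: use stdio, or deploy one NodeZero MCP server per user/API key.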

Recommended Inputs to Further Harden Self-Hosted Deployments

The NodeZero MCP Server leverages a single required input for local deployments:

```json
"inputs": [
  {
    "type": "promptString",
    "id": "h3_api_key",
    "description": "H3 API Key",
    "password": true
  }
]
```

Why This Input Is Defined

We included h3_api_key as a password-masked promptString input to guide secure handling of secrets in local MCP deployments:

- Supports runtime-only secret entry

  - If left blank in IDE settings, the user is prompted interactively.

  - The key only exists in memory during the container session.

- Reduces exposure when combined with Docker `-e`

  - The API key never persists in images or disk-based configs.

- Flexible IDE-driven launches

  - Keys can be provided securely via IDE prompt or Docker runtime without modifying source files.

Important: If a user hardcodes the key in MCP.json settings, it will persist in plaintext on disk. For the most secure setup, leave the input blank and use runtime injection.
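The same runtime-injection idea applies to any script you write around the server. The sketch below is a hypothetical helper, not part of the NodeZero MCP Server: `resolve_api_key` is an invented name, and the only detail taken from this post is the `H3_API_KEY` environment variable. It reads the key from the environment first, falls back to a masked prompt, and never writes the key to disk.

```python
import getpass
import os
from typing import Callable, Mapping, Optional


def resolve_api_key(
    env: Optional[Mapping[str, str]] = None,
    prompt: Callable[[str], str] = getpass.getpass,
) -> str:
    """Return the API key from the environment, or ask for it interactively.

    The key is held only in memory; nothing is persisted to a config file.
    """
    source = os.environ if env is None else env
    key = source.get("H3_API_KEY", "").strip()
    if not key:
        # getpass hides the typed characters, similar to a password-masked input.
        key = prompt("H3 API Key: ").strip()
    if not key:
        raise ValueError("no API key provided")
    return key
```

Passing `env` and `prompt` as parameters is a design choice for testability: a test can supply a plain dictionary and a stub prompt instead of touching the real environment or terminal.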

Best Practices for Secure Use of Inputs

- Use stdio mode for locally hosted MCP servers.

- Treat API keys like sensitive passwords

  - Store them with the same caution as any production credential.

  - Do not check them into source control or leave them in plaintext files.

- Provide keys at runtime whenever possible

  - Use IDE prompts or Docker `-e H3_API_KEY` to inject the key on launch.

- When in doubt, generate a new key in the Horizon3 Portal

  - If a key may have been exposed, immediately create a new one and revoke the old key in the Portal.

  - This is the simplest and most reliable method to eliminate risk from accidental disclosure.

- Isolate the runtime container with no shared host volumes or persistent mounts.

- Clean up containers after use to ensure no memory-resident secrets linger.

- SSE/Streamable HTTP with the locally hosted MCP server does not support runtime isolation or JWTs tied to a session ID.

- Audit GraphQL activity and rotate keys regularly to minimize exposure and verify keys are only being used as expected.

By leveraging password-masked runtime inputs, we support ephemeral secret handling and help keep long-lived keys out of source control and local config files.