Automation built on vulnerability scanner output, CVE databases, and CVSS scores fails when agents prioritize critical-rated vulnerabilities that aren’t exploitable while missing medium-severity weaknesses that lead to domain admin. You’re drowning in findings but can’t confidently decide what to fix first, or prove you fixed it correctly. Enterprises have already invested heavily in GenAI across business units. Security teams can leverage these investments to blend exploitability validation with business context, moving from triaging scanner output to systematically closing proven attack paths through continuous verification.

The Exploitability Foundation

When vulnerability scanners complete, your AI agent gets a list of potential issues—misconfigured servers, exposed services, missing patches—each with CVE data, CVSS scores, and generic impact descriptions. None of this tells you what an attacker can actually do in your environment.

Autonomous pentesting provides the missing context by attempting actual exploitation. Instead of “Cisco ASA has CVE-2025-20362, CVSS 9.8,” workflows get proof of exploit, the exact attack chain, downstream impact, threat actor mapping, and environment-specific fix actions.

What Exploitability Data Looks Like

When querying NodeZero for exploitability intelligence, workflows receive actionable offensive security context like this:

Asset: CISCO-ASA-01

Weakness ID: CVE-2025-20362

Proof of Exploit: Successful authentication bypass leading to remote code execution

Attack Chain: Unauthenticated access via authentication bypass → command injection → credential harvesting from configuration files → lateral movement to internal network segments

Downstream Impact: Direct path to 3 domain controllers, 12 file servers containing financial data, SQL database cluster with customer PII, access to internal network from perimeter firewall position

MITRE ATT&CK TTPs: T1190 (Exploit Public-Facing Application), T1078 (Valid Accounts), T1003 (OS Credential Dumping), T1021 (Remote Services)

Fix Actions: Apply Cisco security patch immediately, enable multi-factor authentication on administrative access, segment ASA/FTD appliance from critical infrastructure, rotate credentials for service accounts accessible from appliance, implement network monitoring for anomalous traffic patterns from firewall

This data moat, the distinction between theoretically vulnerable and provably exploitable, becomes the foundation for confident automation.
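To make the shape of that record concrete, here is a minimal sketch of how a workflow might model it in Python. The class name, field names, and the `parse_attack_chain` helper are illustrative assumptions, not NodeZero's actual schema or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExploitFinding:
    """One exploitability record. Field names are hypothetical, not a vendor schema."""
    asset: str
    weakness_id: str
    proof_of_exploit: str                    # empty string: exploitation was not proven
    attack_chain: tuple[str, ...] = ()       # ordered steps the attacker took
    downstream_impact: tuple[str, ...] = ()  # what the chain ultimately reaches
    attack_ttps: tuple[str, ...] = ()        # MITRE ATT&CK technique IDs
    fix_actions: tuple[str, ...] = ()        # environment-specific remediation steps

    @property
    def proven(self) -> bool:
        """True only when the record carries actual proof of exploit."""
        return bool(self.proof_of_exploit.strip())


def parse_attack_chain(text: str) -> tuple[str, ...]:
    """Split an arrow-delimited chain such as 'a → b → c' into ordered steps."""
    return tuple(step.strip() for step in text.split("→") if step.strip())


# The example record from the article, reduced to the fields used here.
finding = ExploitFinding(
    asset="CISCO-ASA-01",
    weakness_id="CVE-2025-20362",
    proof_of_exploit="Successful authentication bypass leading to remote code execution",
    attack_chain=parse_attack_chain(
        "Unauthenticated access via authentication bypass → command injection → "
        "credential harvesting from configuration files → "
        "lateral movement to internal network segments"
    ),
    attack_ttps=("T1190", "T1078", "T1003", "T1021"),
)
```

Keeping `proven` as a derived property means downstream automation can gate on proof of exploit rather than on a CVSS score.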

With this data foundation established, let’s examine how workflows evolve when you add it.

From Triage to Systematic Remediation: The Evolution

The Legacy Manual Flow

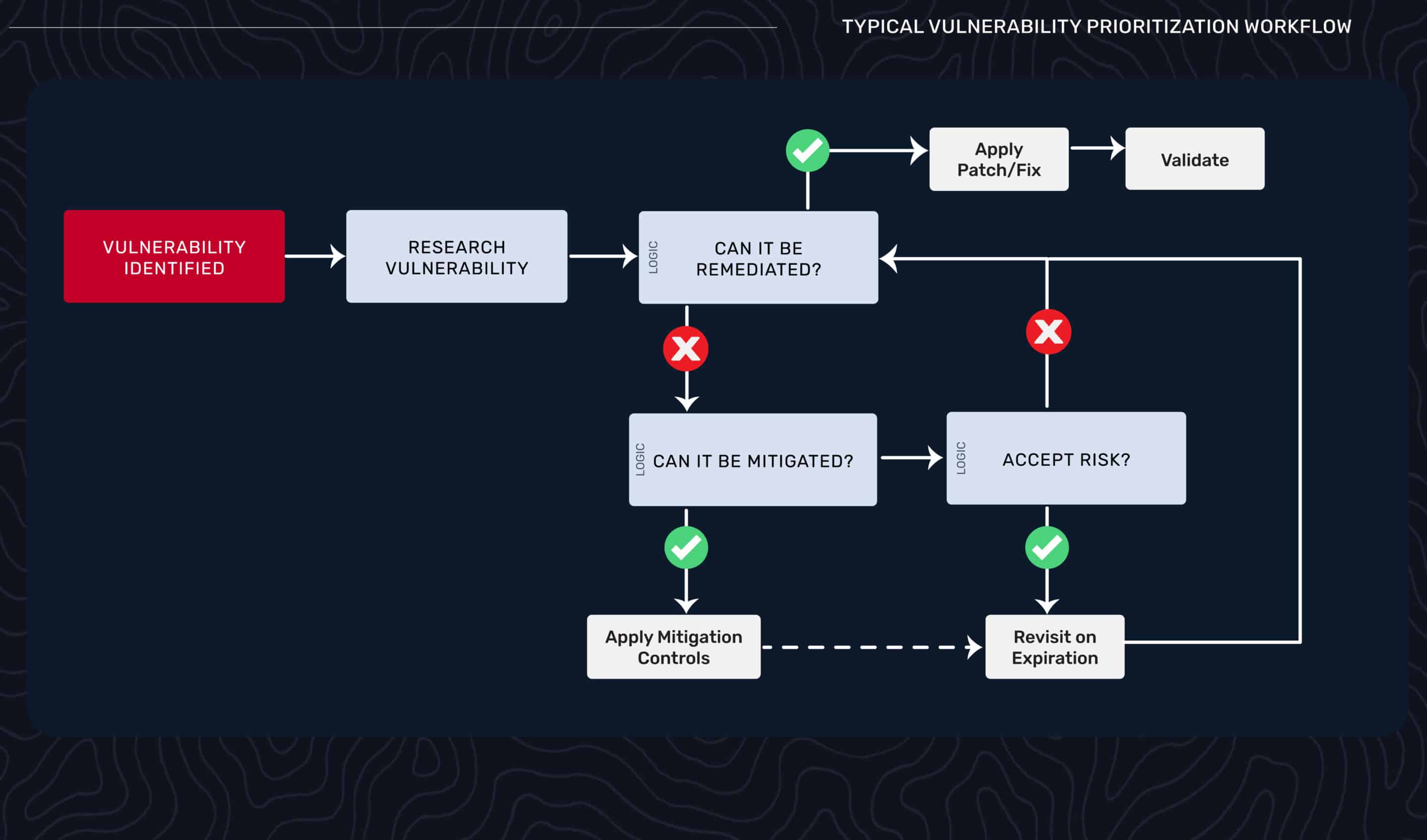

Here’s what vulnerability prioritization looked like before agentic AI. A scanner identifies a vulnerability. A human researches it—checking CVE databases, looking for exploit code, reading vendor advisories. They assess whether it can be remediated with a patch, if it requires mitigation controls, or if the organization needs to accept the risk. After remediation, another human validates the fix worked. Then the cycle repeats.

The bottleneck: humans in every decision loop because you can’t trust the data quality. Is this vulnerability actually exploitable? Will this patch close the attack path? You don’t know, so you can’t automate. The workflow gates on human expertise at every critical junction.

Agentic AI begins to change this picture.

Agentic AI Without Exploitability Data

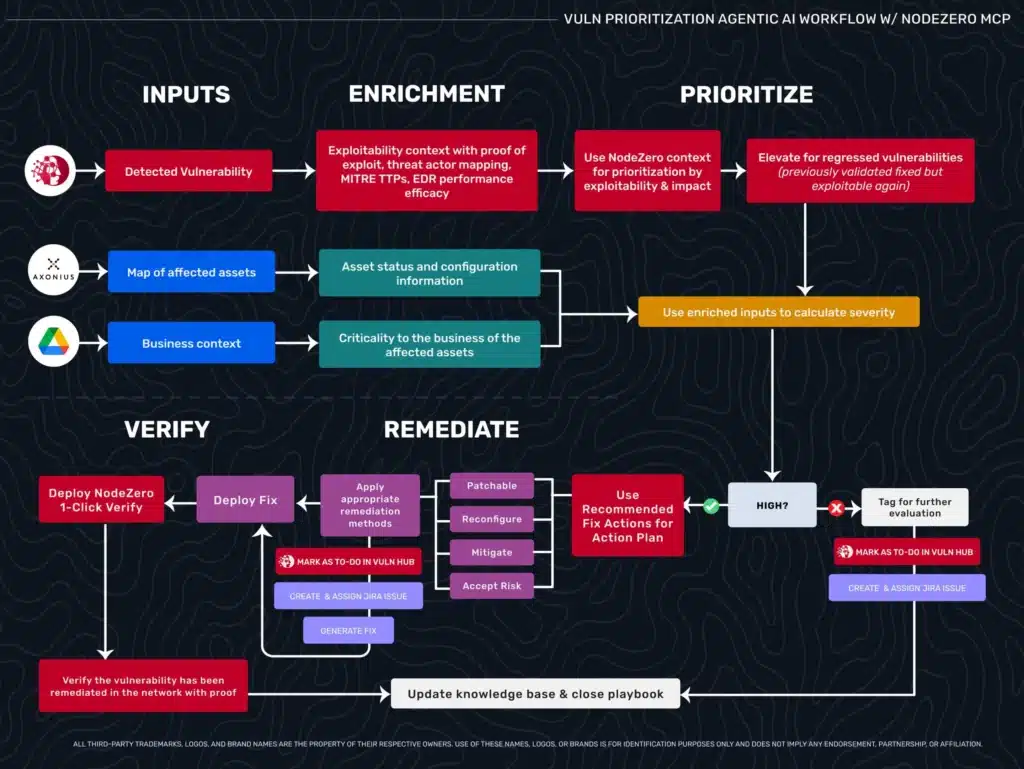

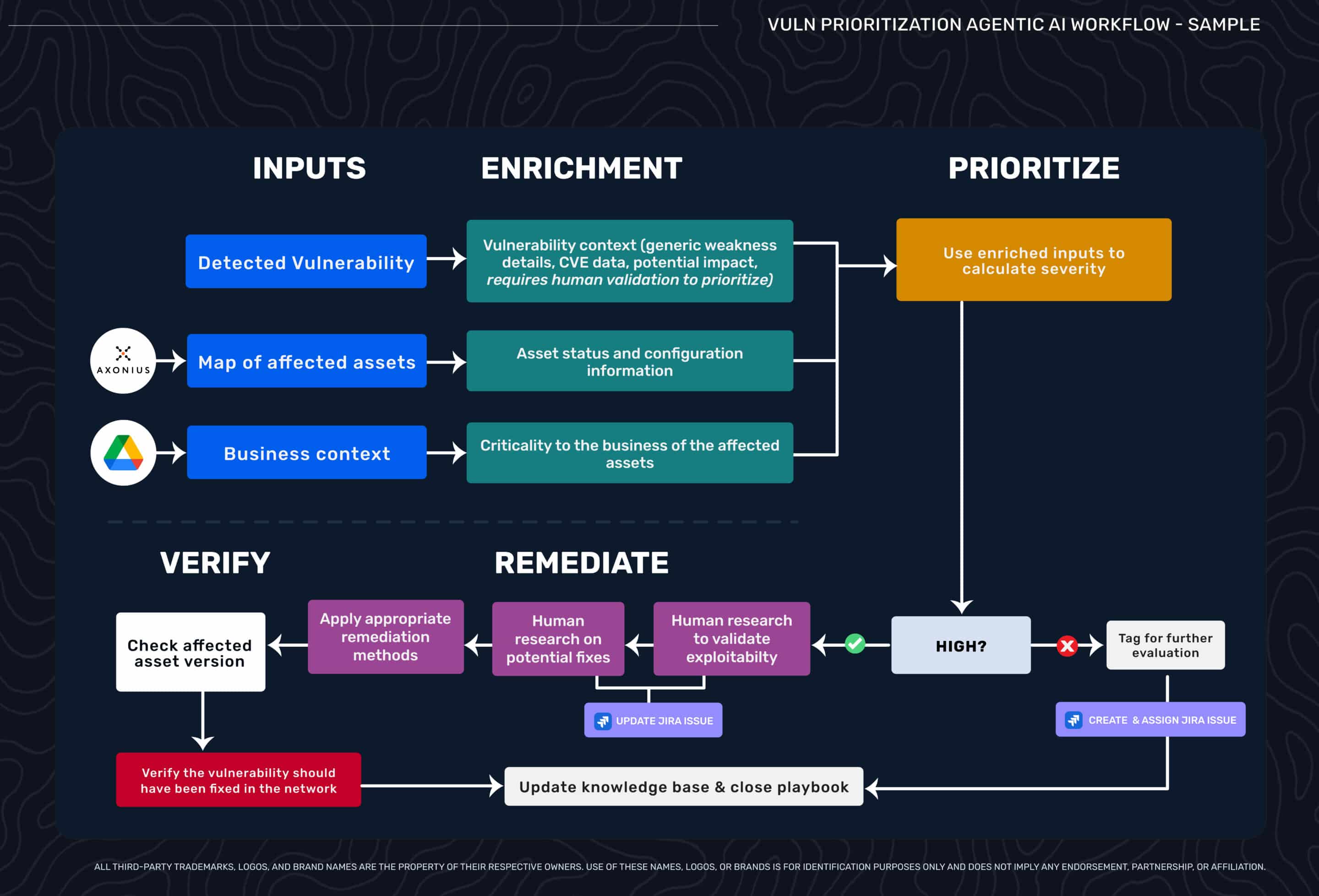

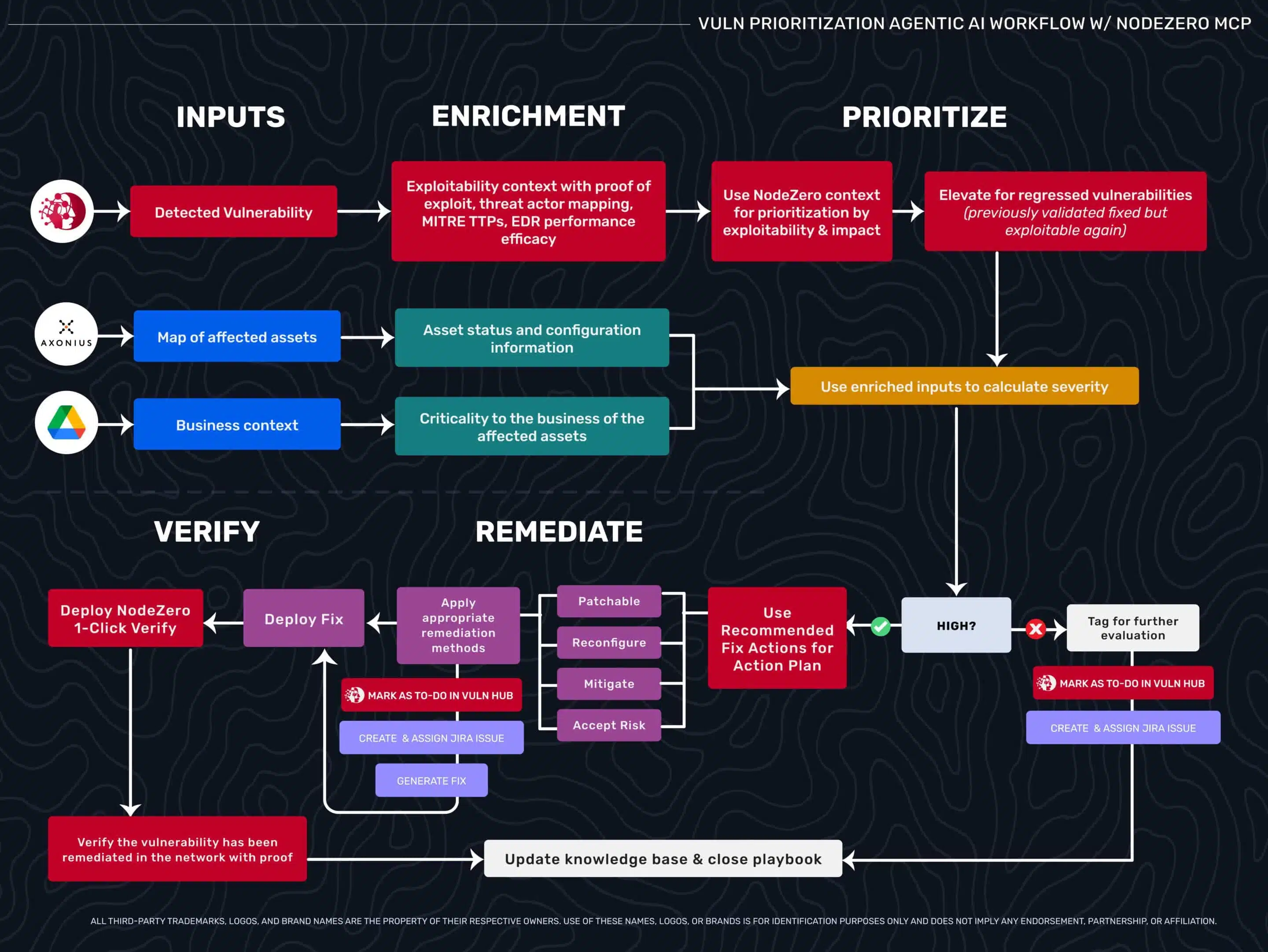

Agentic AI accelerates this workflow by automating the research and enrichment phases. When a vulnerability is detected, AI agents automatically map affected assets across your infrastructure, pull configuration information from asset management systems, and gather business context from project management platforms and financial forecasting tools. Agents query vulnerability databases for CVE details and potential impact.

This speeds up triage significantly. Multiple MCP servers work together to enrich findings with different dimensions of context. Asset management systems provide technical configuration details. Business intelligence platforms surface information about asset criticality and organizational priorities. Vulnerability databases contribute generic CVE scoring and theoretical impact assessments.

But the core limitation remains: the workflow still requires human validation of exploitability and human research to determine if a fix worked. The agent automates data gathering from multiple sources, but humans still make critical decisions because the underlying data—CVE scores, generic descriptions, theoretical impact—doesn’t tell you what’s actually exploitable in your specific environment.

Contextual Prioritization: MCP Servers Sparring on Risk

Here’s where the MCP ecosystem reveals its true power: multi-dimensional decision-making through multiple context sources working together.

Consider two assets with identical exploitable vulnerabilities. Both have the same weakness. Both show the same downstream impact in terms of technical access—let’s say privilege escalation leading to domain admin. From a pure offensive security perspective, they’re equally critical. But security risk doesn’t exist in a vacuum.

Asset management reveals Server A hosts a development environment with no production dependencies. Server B hosts staging infrastructure for a customer demo tied to a major contract—15% of next quarter’s revenue. Compliance databases flag regulatory requirements on Server B, adding legal exposure. Identical vulnerabilities, identical downstream impact, vastly different business implications.

In this case, you’re synthesizing intelligence from across your organization—asset inventory, project management, financial planning, compliance databases, autonomous pentesting—into unified priority scores that blend proven attack paths with business priorities.
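As a rough illustration of what a unified priority score could look like for the Server A and Server B example, the sketch below blends proof of exploit with business context. The weights, field names, and the choice to score unproven findings at zero are assumptions made for the example and would need tuning for a real environment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AssetContext:
    """Context merged from several sources. All field names are hypothetical."""
    name: str
    proven_exploitable: bool     # from autonomous pentesting
    reaches_domain_admin: bool   # downstream impact of the attack chain
    production_dependency: bool  # from asset management
    revenue_at_risk_pct: float   # from financial planning, 0-100
    regulated: bool              # from compliance databases


def priority_score(ctx: AssetContext) -> float:
    """Return a 0-100 score. The weights are arbitrary placeholders."""
    if not ctx.proven_exploitable:
        return 0.0  # simplification: unproven findings stay in the normal backlog
    score = 40.0
    score += 20.0 if ctx.reaches_domain_admin else 0.0
    score += 10.0 if ctx.production_dependency else 0.0
    score += min(ctx.revenue_at_risk_pct, 20.0)  # cap the revenue contribution
    score += 10.0 if ctx.regulated else 0.0
    return score


# Same weakness, same technical impact, different business context.
server_a = AssetContext("Server A", True, True, False, 0.0, False)
server_b = AssetContext("Server B", True, True, False, 15.0, True)
ranked = sorted([server_a, server_b], key=priority_score, reverse=True)
```

With these placeholder weights, Server B ranks first because the revenue and compliance signals add to an otherwise identical technical score.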

Now add the final piece: autonomous verification.

Transformation Through Exploitability + Verification

Enrichment with Proof of Exploit

Instead of generic CVE data, workflows query autonomous pentesting platforms like NodeZero for exploitability context: proof of exploit, attack chain, downstream impact, fix actions tailored to your configuration, MITRE ATT&CK TTPs, and threat intelligence on active exploitation. This offensive security foundation transforms what’s possible when combined with organizational context.

Prioritization Using Multi-Dimensional Context

This is MCP servers sparring on risk—each contributing different dimensions that combine into confident decisions. Two vulnerabilities might affect equally critical business systems, but only one has proof of exploit with a direct path to domain admin. That gets elevated. Another scenario: two vulnerabilities both have proof of exploit with identical downstream impact, but one affects infrastructure supporting a critical product launch tied to significant revenue. That business context tips the scales. Your agent synthesizes this intelligence into unified priority lists, automatically marking high-priority items for immediate action.

Verification Through Autonomous Retesting

Here’s where the workflow extends into territory you couldn’t automate before—the mini Find-Fix-Verify loop.

After remediation, instead of hoping the fix worked or scheduling manual validation weeks later, the workflow uses recommended fix actions to deploy changes and immediately triggers verification testing. Autonomous pentesting platforms with rapid retesting capabilities (such as NodeZero’s 1-Click Verify) rerun the same exploitation attempts against the remediated asset.

If the attack path is closed, the platform returns proof of non-exploitability. The workflow updates your ticketing system with evidence that the fix worked—not just “patch deployed” but “re-exploitation failed, attack path verified closed.” The ticket closes automatically with evidence.

If the weakness is still exploitable, the loop continues. The platform provides additional context about why the first remediation attempt failed. Maybe the patch was applied but authentication wasn’t enforced, or the service was updated but credentials weren’t rotated. The workflow regenerates the fix incorporating this feedback, redeploys, and retests.

Only when verification produces ambiguous results or fails after multiple attempts does the workflow escalate back to human review. You’re automating with human oversight by exception rather than validation at every step.
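The loop described above can be sketched in a few lines. Here `apply_fix` and `retest` are hypothetical callables standing in for a deployment pipeline and an autonomous retest; they are not real platform APIs, and the three-attempt limit is an assumed default.

```python
from enum import Enum
from typing import Callable


class Verdict(Enum):
    CLOSED = "closed"            # re-exploitation failed
    EXPLOITABLE = "exploitable"  # attack path still works
    AMBIGUOUS = "ambiguous"      # retest could not decide


def fix_and_verify(
    apply_fix: Callable[[str], None],
    retest: Callable[[], tuple[Verdict, str]],
    initial_fix: str,
    max_attempts: int = 3,
) -> str:
    """Return 'closed' when the retest proves the path is shut, else 'escalate'."""
    fix = initial_fix
    for _ in range(max_attempts):
        apply_fix(fix)
        verdict, feedback = retest()
        if verdict is Verdict.CLOSED:
            return "closed"
        if verdict is Verdict.AMBIGUOUS:
            return "escalate"  # humans handle unclear results
        # Still exploitable: fold the retest feedback into the next attempt.
        fix = f"{fix}; {feedback}"
    return "escalate"  # repeated failures also go to human review


# Simulated run: the first fix is incomplete, the second closes the path.
applied: list[str] = []
results = iter([
    (Verdict.EXPLOITABLE, "rotate service account credentials"),
    (Verdict.CLOSED, ""),
])
outcome = fix_and_verify(applied.append, lambda: next(results), "apply vendor patch")
```

Escalation is the only exit that involves a person, which is what oversight by exception amounts to in practice.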

When Immediate Remediation Isn’t Possible

The verification loop assumes patches exist. When they don’t—legacy systems, vendor dependencies, business constraints—workflows automate compensating controls instead.

For example, the workflow deploys honey tokens (NodeZero’s Tripwire) on affected assets to detect exploitation attempts. It also generates EDR/SIEM rules for the observed attack techniques and routes them to analysts for approval. For segmentation, it implements network policies automatically if IaC controls exist; otherwise, it generates firewall rules with business impact analysis for approval.
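One way to picture that branching is a small planner that decides which controls deploy automatically and which wait for approval. The function, its parameters, and the action strings are hypothetical; a real workflow would call the relevant deception, EDR/SIEM, and IaC tooling instead of returning text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ControlAction:
    control: str          # deception, detection, or segmentation
    action: str           # human-readable description of the step
    needs_approval: bool  # True when a person must sign off first


def plan_compensating_controls(ttps: list[str], has_iac: bool) -> list[ControlAction]:
    """Build a compensating-control plan for an asset that cannot be patched."""
    plan = [ControlAction("deception", "deploy honey tokens on affected asset", False)]
    for ttp in ttps:
        # Detection rules are drafted automatically but reviewed by analysts.
        plan.append(ControlAction("detection", f"draft EDR/SIEM rule for {ttp}", True))
    if has_iac:
        plan.append(ControlAction("segmentation", "apply network policy via IaC", False))
    else:
        plan.append(ControlAction(
            "segmentation", "draft firewall rules with business impact analysis", True
        ))
    return plan


plan = plan_compensating_controls(["T1190", "T1078"], has_iac=False)
pending = [step.action for step in plan if step.needs_approval]
```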

Mitigation status tracks alongside remediation tickets. Board documentation becomes: “Can’t patch due to vendor dependency. Deployed Tripwire to detect exploitation attempts. Implemented segmentation reducing blast radius from domain admin to isolated segment. Monitoring with tuned EDR rules for this attack chain.” Compensating controls are backed by offensive validation.

The FixOps Operating Model

Consider the operational transformation. A new CISA KEV entry drops. Your agent immediately queries asset inventory for affected systems and checks autonomous pentesting platforms for existing exploitability validation. If those systems were tested, you instantly know whether you’re exploitable. If not, the platform launches targeted tests and provides proof within moments. Traditional pentesting, by contrast, requires scheduling a new engagement, which delays action by weeks if not months. For exploitable findings, the agent queries business context sources for operational impact, generates remediation using environment-specific fix actions, deploys through CI/CD, and triggers verification tests. When verification confirms the attack path closed, the agent logs proof and closes incidents. Automatically. With evidence.
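The first step of that flow, deciding what is already known when a KEV entry arrives, might look like the sketch below. The in-memory dictionaries are hypothetical stand-ins for asset inventory and pentest result queries, the asset names are invented, and the CVE is reused from the earlier example purely for illustration.

```python
from typing import Optional


def triage_kev(
    cve_id: str,
    inventory: dict[str, set[str]],
    prior_results: dict[tuple[str, str], bool],
) -> dict[str, list[str]]:
    """Bucket affected assets by what is already known about exploitability.

    inventory maps asset name -> CVE IDs present on it. prior_results maps
    (asset, CVE) -> True if an earlier test proved exploitation.
    """
    buckets: dict[str, list[str]] = {"remediate": [], "no_action": [], "launch_test": []}
    for asset in sorted(inventory):
        if cve_id not in inventory[asset]:
            continue  # asset is not affected by this KEV entry
        proven: Optional[bool] = prior_results.get((asset, cve_id))
        if proven is None:
            buckets["launch_test"].append(asset)  # never tested: validate first
        elif proven:
            buckets["remediate"].append(asset)    # proven exploitable: fix now
        else:
            buckets["no_action"].append(asset)    # tested, not exploitable
    return buckets


inventory = {
    "fw-01": {"CVE-2025-20362"},
    "fw-02": {"CVE-2025-20362"},
    "web-01": set(),
}
prior_results = {("fw-01", "CVE-2025-20362"): True}
buckets = triage_kev("CVE-2025-20362", inventory, prior_results)
```

Only the `remediate` bucket proceeds to fix generation; the `launch_test` bucket is where targeted testing would be triggered.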

The infrastructure exists. Enterprises already have AI investments across business units. Autonomous pentesting platforms validate exploitability at scale and autonomously verify remediation. MCP servers make this intelligence accessible to your workflows. What remains is implementation: connecting the pieces to build workflows that systematically fix proven attack paths and give you the confidence to automate remediation decisions. This is how you transform your AI ecosystem from noisy automation centers into decision-making engines that drive your organization to prioritize and fix what matters.

Architectural Considerations: For teams implementing these workflows in production, here are key architectural patterns to consider.